High-Level Language

A high-level language is a programming language designed to simplify computer programming. It is "high-level" since it is several steps removed from the actual code run on a computer's processor. High-level source code contains easy-to-read syntax that is later converted into a low-level language, which can be recognized and run by a specific CPU.
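To make the idea of code being "several steps removed" from the processor more concrete, the following Python sketch uses the standard-library `dis` module. It lists the lower-level instructions that a single line of high-level source code is translated into. The function `average` is a hypothetical example. CPython produces bytecode for its own virtual machine rather than native CPU instructions, so this shows only one intermediate step on the way down. The exact instruction names also vary between Python versions.

```python
import dis

def average(a, b):
    # One easy-to-read line of high-level source code...
    return (a + b) / 2

# ...is translated into several lower-level instructions. CPython's bytecode
# targets a virtual machine, not a physical CPU, so this is an intermediate
# representation rather than true machine code.
instructions = [ins.opname for ins in dis.get_instructions(average)]
print(instructions)
```

Calling `dis.dis(average)` prints the same instructions as a more detailed, human-readable listing.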

Most common programming languages are considered high-level languages. Examples include C++, C#, Perl, and PHP.

Each of these languages uses different syntax. Some are designed for writing desktop software, while others are best suited for web development. All of them are considered high-level, however, because their source code must be processed by a compiler or interpreter before it can be executed.

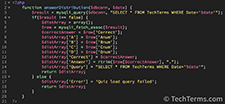

Source code written in languages like C++ must be compiled into machine code before it can run. The compilation process converts the human-readable syntax of the high-level language into low-level code for a specific processor. C# is typically compiled first into an intermediate language, which the .NET runtime then translates into machine code when the program runs. Source code written in scripting languages like Perl and PHP can instead be run through an interpreter, which translates the high-level code and executes it on the fly.
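The difference between compiling ahead of time and interpreting on the fly can be sketched with a toy expression language. This is a minimal illustration under simplifying assumptions, not how real C++ compilers or Perl and PHP interpreters work: programs are nested tuples such as `("+", 2, ("*", 3, 4))`, only `+` and `*` are supported, and the "low-level code" is a made-up stack-machine instruction format. The names `interpret`, `compile_expr`, `run`, and the `PUSH`/`OP` instructions are all hypothetical.

```python
import operator

# The only operators this toy language supports.
OPS = {"+": operator.add, "*": operator.mul}

def interpret(expr):
    """Evaluate the expression directly, translating each step as it is reached."""
    if isinstance(expr, (int, float)):
        return expr
    op, left, right = expr
    return OPS[op](interpret(left), interpret(right))

def compile_expr(expr, code=None):
    """Translate the whole expression ahead of time into a flat list of
    low-level stack-machine instructions, without evaluating anything."""
    if code is None:
        code = []
    if isinstance(expr, (int, float)):
        code.append(("PUSH", expr))
    else:
        op, left, right = expr
        compile_expr(left, code)   # operands first...
        compile_expr(right, code)
        code.append(("OP", op))    # ...then the operation that combines them
    return code

def run(code):
    """Execute previously compiled instructions on a simple stack machine."""
    stack = []
    for instr in code:
        if instr[0] == "PUSH":
            stack.append(instr[1])
        else:
            b, a = stack.pop(), stack.pop()
            stack.append(OPS[instr[1]](a, b))
    return stack.pop()

program = ("+", 2, ("*", 3, 4))
print(interpret(program))   # 14
compiled = compile_expr(program)
print(compiled)  # [('PUSH', 2), ('PUSH', 3), ('PUSH', 4), ('OP', '*'), ('OP', '+')]
print(run(compiled))        # 14
```

The interpreter does its translation work every time the program runs, while the compiler does it once and produces instructions that can be executed repeatedly without revisiting the original source.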
