Edge Caching

Edge caching is a mechanism that content delivery networks (CDNs) use to cache Internet content in many locations around the world. The cached content may include website data, cloud storage files, and streaming media. By storing copies of files in multiple "edge" locations, a CDN can deliver content to users more quickly than a single server can.

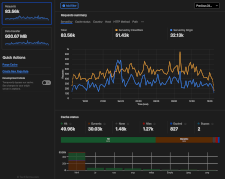

CDNs may have tens or hundreds of data centers around the world. Each data center contains edge servers that deliver data to users in the surrounding region. In most cases, an edge server "pulls" data from an origin server the first time a user requests it. Once an edge server pulls an image, video, or other object, it caches the file, typically for a few days or weeks. The CDN then serves subsequent requests for that file from the edge server rather than the origin.
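The pull-and-cache behavior described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular CDN works: `EdgeCache`, `fetch_from_origin`, and `ttl_seconds` are hypothetical names, the origin is modeled as any function that returns a file's bytes, and the cache lifetime is a single fixed time-to-live (TTL). A fake clock is injected so expiry can be shown without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Minimal pull-through cache for one edge server (hypothetical sketch)."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.fetch_from_origin = fetch_from_origin  # assumed client for the origin server
        self.ttl_seconds = ttl_seconds              # how long a cached copy stays fresh
        self.clock = clock                          # injectable so expiry can be tested
        self.store: Dict[str, Tuple[bytes, float]] = {}  # path -> (body, expiry time)
        self.origin_fetches = 0                     # trips made to the origin

    def get(self, path: str) -> bytes:
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and now < entry[1]:
            return entry[0]  # cache hit: serve the copy held at the edge
        # Cache miss (first request) or expired copy: pull from the origin and cache it.
        body = self.fetch_from_origin(path)
        self.origin_fetches += 1
        self.store[path] = (body, now + self.ttl_seconds)
        return body


if __name__ == "__main__":
    WEEK = 7 * 24 * 3600
    fake_now = [0.0]
    origin_files = {"/logo.png": b"PNG bytes"}
    edge = EdgeCache(origin_files.__getitem__, ttl_seconds=WEEK, clock=lambda: fake_now[0])

    edge.get("/logo.png")         # first request: pulled from the origin
    edge.get("/logo.png")         # repeat request: served from the edge
    print(edge.origin_fetches)    # 1
    fake_now[0] += 8 * 24 * 3600  # eight days later, past the one-week TTL
    edge.get("/logo.png")         # expired: pulled from the origin again
    print(edge.origin_fetches)    # 2
```

Real edge servers usually take the cache lifetime from HTTP headers such as `Cache-Control` sent by the origin; the fixed TTL here only stands in for that.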

Tiered Caching

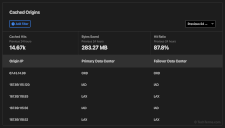

A CDN may automatically propagate newly pulled content to all of its edge servers or wait for each server to request the data. Automatic propagation reduces trips to the origin server but may unnecessarily duplicate rarely accessed files. Waiting for local requests uses storage more efficiently but increases trips to the origin server. Modern CDNs use tiered caching to balance the two methods. The first time a user accesses a file, the CDN caches it on the local edge server and on several "primary data centers." When another edge server later needs the same file, it can pull it from a primary data center instead of the origin. This approach avoids unnecessary propagation of cached files while still limiting trips to the origin server.
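A tiered layout can be sketched as two layers of caches. In this hypothetical Python sketch, `Origin`, `PrimaryTier`, and `EdgeServer` are illustrative names, and the model is deliberately simplified: there is a single primary tier and no expiry, neither of which a real CDN would assume. Each edge caches only what its own users request, and only the primary tier ever contacts the origin.

```python
from typing import Dict


class Origin:
    """Stand-in for the origin server; counts how often it is contacted."""

    def __init__(self, files: Dict[str, bytes]) -> None:
        self.files = files
        self.requests = 0

    def fetch(self, path: str) -> bytes:
        self.requests += 1
        return self.files[path]


class PrimaryTier:
    """Hypothetical upper tier standing in for the primary data centers."""

    def __init__(self, origin: Origin) -> None:
        self.origin = origin
        self.cache: Dict[str, bytes] = {}

    def fetch(self, path: str) -> bytes:
        if path not in self.cache:
            # Only this tier talks to the origin, and only once per file.
            self.cache[path] = self.origin.fetch(path)
        return self.cache[path]


class EdgeServer:
    """Lower tier: caches only the files its own users request (no push)."""

    def __init__(self, name: str, upstream: PrimaryTier) -> None:
        self.name = name
        self.upstream = upstream
        self.cache: Dict[str, bytes] = {}

    def get(self, path: str) -> bytes:
        if path not in self.cache:
            # Local miss: ask the primary tier instead of the origin.
            self.cache[path] = self.upstream.fetch(path)
        return self.cache[path]


if __name__ == "__main__":
    origin = Origin({"/video.mp4": b"frames"})
    primary = PrimaryTier(origin)
    sydney = EdgeServer("sydney", primary)
    london = EdgeServer("london", primary)
    tokyo = EdgeServer("tokyo", primary)

    sydney.get("/video.mp4")            # miss everywhere: primary tier pulls from origin
    london.get("/video.mp4")            # local miss, but the primary tier already has it
    print(origin.requests)              # 1
    print("/video.mp4" in tokyo.cache)  # False: no request, so no copy
```

The second city's request is filled by the primary tier, so the origin is contacted only once, while an edge that never receives a request holds no copy. That is the balance between propagation and origin trips that tiered caching aims for.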

Edge Caching Benefits

Edge caching reduces latency, providing faster and more consistent delivery of Internet content to users around the world. For example, a user in Sydney, Australia, may experience a two-second delay when accessing a server in Houston, Texas. If the data is cached in Australia, the delay may be less than one-tenth of a second.

While the primary purpose of edge caching is to improve content delivery speed, it also provides two other significant benefits: bandwidth reduction and redundancy.

Edge caching reduces Internet bandwidth usage by shortening the distance data must travel to each user. Serving requests from local edge servers reduces Internet congestion and limits traffic bottlenecks.

Replicating data across multiple global data centers also provides redundancy. Although CDNs typically pull data from an origin server, individual data centers can serve as backups, continuing to deliver their cached copies if the origin server fails or becomes inaccessible.
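The backup role of edge data centers can be illustrated with a "serve stale on error" policy, loosely modeled on the HTTP `stale-if-error` Cache-Control extension (RFC 5861). In this hypothetical Python sketch, `FailoverEdge` and `OriginUnavailable` are illustrative names: a fresh copy is served directly, an expired copy triggers a trip to the origin, and if the origin cannot be reached the edge falls back to its last cached copy. The fallback only covers files the edge already holds; a file that was never cached cannot be served while the origin is down.

```python
import time
from typing import Callable, Dict, Tuple


class OriginUnavailable(Exception):
    """Hypothetical error raised when the origin server cannot be reached."""


class FailoverEdge:
    """Edge cache that serves an expired copy when the origin is down."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.fetch_from_origin = fetch_from_origin
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.store: Dict[str, Tuple[bytes, float]] = {}  # path -> (body, expiry time)

    def get(self, path: str) -> bytes:
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and now < entry[1]:
            return entry[0]  # fresh copy: no origin trip needed
        try:
            body = self.fetch_from_origin(path)
        except OriginUnavailable:
            if entry is not None:
                return entry[0]  # origin down: serve the stale copy as a backup
            raise  # never cached here, so there is nothing to fall back on
        self.store[path] = (body, now + self.ttl_seconds)
        return body


if __name__ == "__main__":
    origin_up = [True]
    fake_now = [0.0]

    def origin(path: str) -> bytes:
        if not origin_up[0]:
            raise OriginUnavailable(path)
        return b"<html>home</html>"

    edge = FailoverEdge(origin, ttl_seconds=3600, clock=lambda: fake_now[0])
    edge.get("/index.html")         # pulled from the origin and cached
    origin_up[0] = False            # the origin fails...
    fake_now[0] += 2 * 3600         # ...after the cached copy has expired
    print(edge.get("/index.html"))  # b'<html>home</html>' (stale copy served)
```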
