Byte

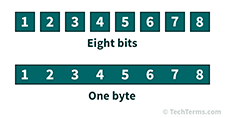

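A byte is a unit of digital data that contains eight bits, where each bit is either a zero or a one. Because each of the eight bits has two possible states, a single byte can represent 2^8, or 256, different values (0 through 255 when read as an unsigned number).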
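The arithmetic above can be checked with a short Python sketch. It counts the values an 8-bit pattern can hold, prints a few of them as bit strings, and shows that Python's built-in `bytes` type rejects anything outside the one-byte range. The variable names are illustrative only.

```python
# Each of the 8 bits can be 0 or 1, so a byte has 2**8 distinct values.
BITS_PER_BYTE = 8
num_values = 2 ** BITS_PER_BYTE
print(num_values)  # 256

# Show a few one-byte values as 8-bit patterns (zero-padded binary).
for value in (0, 65, 255):
    print(value, format(value, "08b"))
# 0 00000000
# 65 01000001
# 255 11111111

# A bytes object can only hold integers 0..255; 256 does not fit in one byte.
try:
    bytes([256])
except ValueError as err:
    print("out of range:", err)
```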

The byte was originally used to store a single character, since 256 values are enough to represent all the uppercase and lowercase letters, digits, and symbols used in Western languages. However, some languages have far more than 256 characters, so modern character encoding standards, such as UTF-16, use at least two bytes (16 bits) per character. Two bytes can represent 2^16, or 65,536, values; UTF-16 encodes characters beyond that range as a pair of 16-bit units, or four bytes.
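A minimal Python sketch of how many bytes UTF-16 uses per character follows. It assumes the little-endian variant (`"utf-16-le"`) so that no byte-order mark is added to the output; the helper name `utf16_size` is hypothetical. Common characters take two bytes, while a character beyond U+FFFF, such as an emoji, takes four.

```python
def utf16_size(ch: str) -> int:
    """Hypothetical helper: number of bytes one character needs in UTF-16."""
    # "utf-16-le" skips the 2-byte byte-order mark that plain "utf-16" prepends.
    return len(ch.encode("utf-16-le"))

# Latin letter, accented letter, CJK character, emoji (U+1F600).
for ch in ("A", "\u00e9", "\u4e2d", "\U0001F600"):
    print(hex(ord(ch)), utf16_size(ch), ch.encode("utf-16-le").hex())

# Number of distinct values a single 16-bit unit can hold.
print(2 ** 16)  # 65536
```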

A small plain text file may contain only a few bytes of data. However, many file systems allocate disk space in clusters, commonly 4 kilobytes in size, so even a tiny non-empty file occupies at least 4 KB on disk. For this reason, bytes are more often used to measure specific data within a file than to measure files themselves. Large files are typically measured in megabytes, while storage capacities are usually measured in gigabytes or terabytes.
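The effect of cluster allocation can be sketched in Python, assuming a fixed 4,096-byte cluster. The constant and the `allocated_size` function are hypothetical, and the model ignores file-system metadata and any special handling some file systems apply to very small files: a file's on-disk size is simply its byte count rounded up to a whole number of clusters.

```python
CLUSTER_SIZE = 4 * 1024  # assumed 4 KB (4,096-byte) cluster

def allocated_size(file_size: int, cluster_size: int = CLUSTER_SIZE) -> int:
    """Hypothetical model: bytes a file occupies when stored in whole clusters."""
    # Integer ceiling division: round the byte count up to a full cluster.
    clusters = (file_size + cluster_size - 1) // cluster_size
    return clusters * cluster_size

for size in (12, 4096, 5000):
    print(size, "bytes ->", allocated_size(size), "bytes on disk")
# 12 bytes -> 4096 bytes on disk
# 4096 bytes -> 4096 bytes on disk
# 5000 bytes -> 8192 bytes on disk
```

Under this model a 12-byte text file still consumes a full 4 KB cluster, which is why file sizes in bytes rarely match the space they take up on disk.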

NOTE: One kibibyte (KiB) contains 1,024 bytes. One mebibyte (MiB) contains 1,024 × 1,024, or 1,048,576, bytes.
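The binary units in the note can be computed directly. This Python sketch also defines the decimal (SI) kilobyte and megabyte, 1,000 and 1,000,000 bytes, purely for comparison; the constant names are illustrative.

```python
# Binary (IEC) units: each step multiplies by 1,024.
KIB = 1024
MIB = 1024 * KIB

# Decimal (SI) units, for comparison: each step multiplies by 1,000.
KB = 1000
MB = 1000 * KB

print(KIB)       # 1024
print(MIB)       # 1048576
print(MIB - MB)  # 48576 bytes more in a mebibyte than in a megabyte
```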
