Integrated Circuit

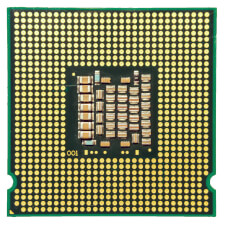

An integrated circuit (IC) is a small semiconductor chip that contains multiple interconnected electronic components, such as transistors, resistors, and capacitors. These components work together to perform specific functions, such as amplifying signals, processing data, or storing information. ICs are the foundation of modern electronics, powering everything from smartphones and computers to appliances and automobiles.

Integrated circuits are typically built on a silicon base and manufactured using photolithography, a process that uses light to transfer circuit patterns onto the silicon. This process allows engineers to create microscopic circuits with components too small to see with the naked eye. Compared with traditional circuits built from individual (discrete) components, integrated circuits offer greater efficiency, lower power consumption, and higher reliability.

Since their invention in the late 1950s, integrated circuits have revolutionized technology, enabling the development of smaller, faster, and more powerful electronic devices. Advancements such as system-on-a-chip (SoC) designs (like Apple silicon) now integrate entire computing systems onto a single chip, further pushing the boundaries of performance and miniaturization.
