Gigahertz

A gigahertz (GHz) is a unit of measurement for frequency, equal to 1,000,000,000 hertz (Hz) or 1,000 megahertz (MHz). Since one hertz is one cycle per second, one gigahertz means that whatever is being measured cycles one billion times per second. While it can measure anything that repeats at that rate, in the context of computers and electronics it most often refers to the clock speed of a computer's processor or the radio frequency of Wi-Fi and other wireless communication.
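The unit relationships above can be illustrated with a short Python sketch. The function names (`ghz_to_hz`, `ghz_to_mhz`, `cycle_time_ns`) are made up for this example and do not come from any library. The cycle time relies on the standard relationship that a period is the reciprocal of the frequency.

```python
# Illustrative helpers only; the names are hypothetical, not from a library.
HZ_PER_GHZ = 1_000_000_000   # 1 GHz = 1,000,000,000 Hz
MHZ_PER_GHZ = 1_000          # 1 GHz = 1,000 MHz


def ghz_to_hz(ghz: float) -> float:
    """Convert a frequency in gigahertz to hertz."""
    return ghz * HZ_PER_GHZ


def ghz_to_mhz(ghz: float) -> float:
    """Convert a frequency in gigahertz to megahertz."""
    return ghz * MHZ_PER_GHZ


def cycle_time_ns(ghz: float) -> float:
    """Duration of one cycle in nanoseconds (period = 1 / frequency).

    Because 1 GHz is one billion cycles per second and a nanosecond is
    one billionth of a second, the period in ns is simply 1 / ghz.
    """
    return 1 / ghz


if __name__ == "__main__":
    print(ghz_to_hz(1))       # hertz in one gigahertz
    print(ghz_to_mhz(4))      # megahertz in four gigahertz
    print(cycle_time_ns(4))   # nanoseconds per cycle at 4 GHz
```

At 1 GHz, each cycle lasts one nanosecond; at 4 GHz, each cycle lasts a quarter of a nanosecond.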

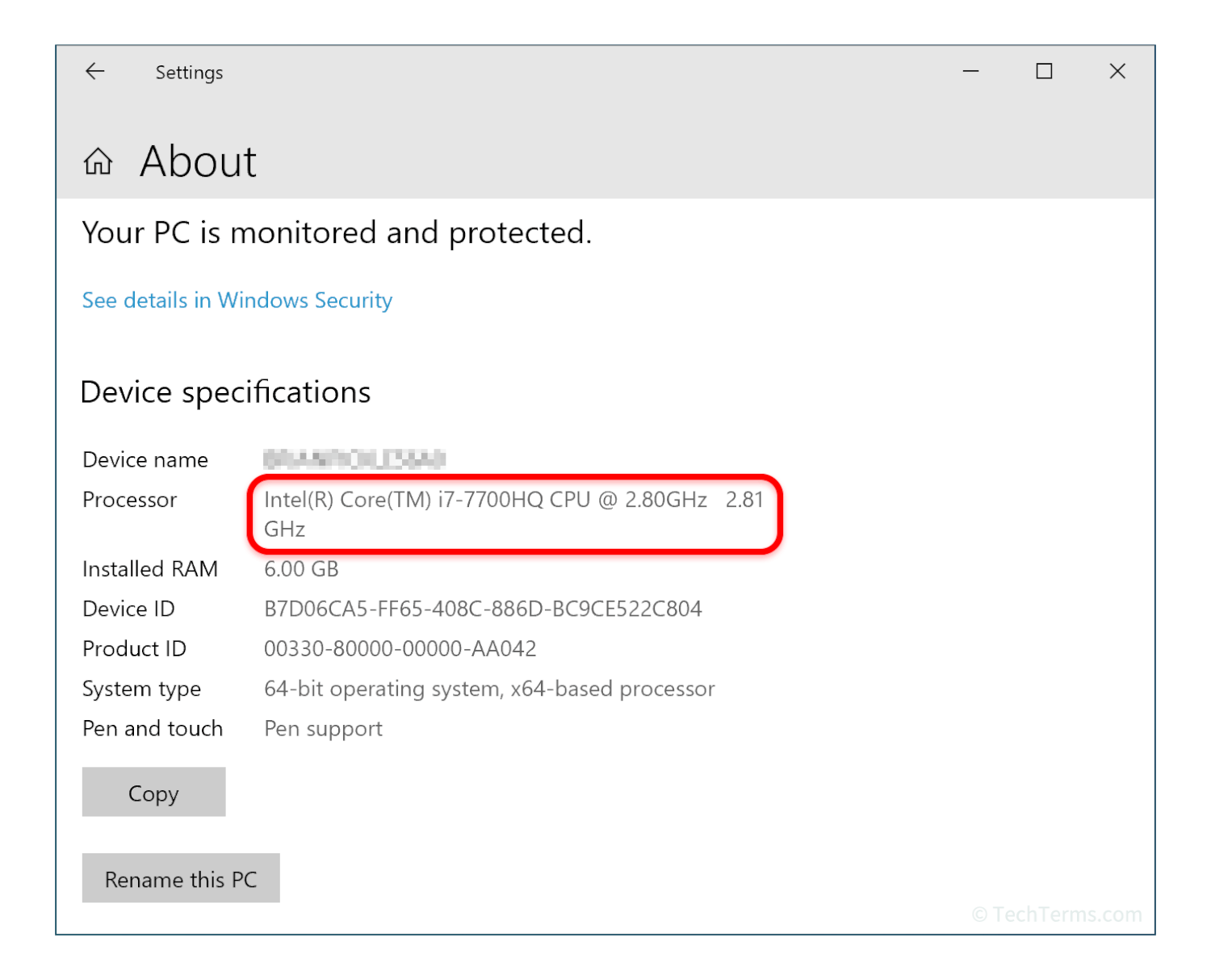

For decades, the clock speed of a computer's CPU was measured in MHz, with higher clock speeds generally indicating a faster processor. In the early 2000s, Intel, AMD, and IBM all released processors with clock speeds over 1,000 MHz, so they began measuring clock speeds in GHz rather than MHz. Within several years of the first 1 GHz processors, new processors had clock speeds over 4 GHz.

Running a processor at high clock speeds requires significant power and produces a lot of heat. After reaching the 4-5 GHz range, chip designers stopped emphasizing clock speed and improved performance through other methods, such as adding more processor cores. For example, a single-core Pentium 4 from 2005 and an 8-core i7 from 2021 run at similar clock speeds (between 3.2 and 3.8 GHz), but the modern i7 is significantly faster.

In addition to processor clock speed, gigahertz is used to measure the frequency of certain radio waves. Wi-Fi networks operate over multiple bands in this part of the spectrum depending on the technology used, including the 2.4 GHz band (for 802.11b, g, and Wi-Fi 4), the 5 GHz band (for Wi-Fi 5 and 6), and the 6 GHz band (for Wi-Fi 6E and 7). Bluetooth wireless connections also use the 2.4 GHz band for short-range personal networks, as do other wireless audio, video, and smart home devices. Finally, some 5G mobile networks use radio waves measured in GHz: mid-band networks transmit between 1.7 and 4.7 GHz, and high-band networks use frequencies between 24 and 47 GHz.
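As a rough illustration of the 5G ranges just mentioned, the following Python sketch labels a frequency as mid-band or high-band. The function name `classify_5g_band` is hypothetical, and the boundaries are simply the figures quoted in this article, not values taken from a carrier or standards document.

```python
# Hypothetical helper; the range limits are the figures quoted in the
# article, not an authoritative band plan.
MID_BAND_GHZ = (1.7, 4.7)
HIGH_BAND_GHZ = (24.0, 47.0)


def classify_5g_band(freq_ghz: float) -> str:
    """Return a label for a 5G frequency given in gigahertz."""
    if MID_BAND_GHZ[0] <= freq_ghz <= MID_BAND_GHZ[1]:
        return "mid-band"
    if HIGH_BAND_GHZ[0] <= freq_ghz <= HIGH_BAND_GHZ[1]:
        return "high-band"
    # Anything else falls outside the two ranges described above.
    return "outside listed ranges"


if __name__ == "__main__":
    for freq in (3.5, 28, 0.6):
        print(f"{freq} GHz: {classify_5g_band(freq)}")
```

A frequency of 3.5 GHz falls in the mid-band range and 28 GHz in the high-band range, while 0.6 GHz is below both.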

NOTE: Other computer components also have clock speeds that can be measured in GHz, like DDR4 and DDR5 memory.
